Framework 196 The Human-Centred AI (HCAI) Approach

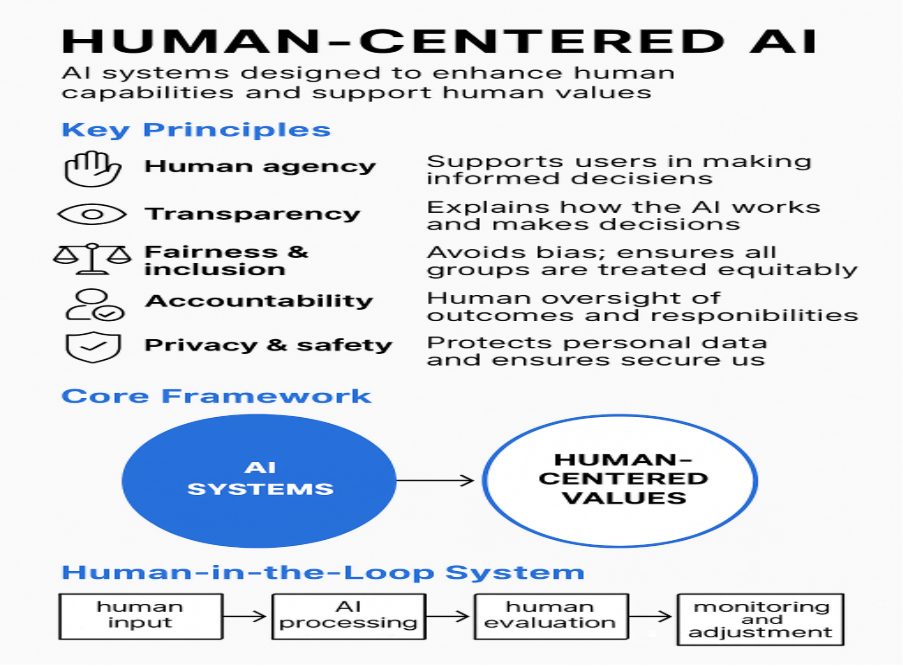

Human-Centered AI (HCAI) refers to the design, development and deployment of artificial intelligence systems that prioritize human values, rights and well-being. It ensures that AI technologies serve people by enhancing human capabilities, fostering trust and enabling equitable participation.

What Is Human-Centered AI?

Human-Centered AI combines principles from:

- Human-Computer Interaction (HCI)

- Ethics and social science

- Artificial intelligence and machine learning

The goal is not just to make AI smarter, but to make it supportive, accountable and beneficial to people.

HCAI often uses a "human-in-the-loop" approach where people are involved at different stages of an AI system:

[Human Input] → [AI Processing] → [Human Evaluation] → [Improved Output]

↑ ↓

[Feedback Loop] ← [Monitoring and Adjustment]

This structure enables adaptability, oversight and trust.
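The loop above can be sketched in a few lines of code. This is a minimal illustration under stated assumptions, not a reference implementation: the class `HumanInTheLoop`, the stand-in functions `toy_model` and `toy_reviewer`, and the `override_rate` metric are all hypothetical names introduced here. The AI step proposes an output, a person accepts or corrects it, and every correction is logged so the system can be monitored and adjusted later.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class HumanInTheLoop:
    """Toy pipeline: the AI proposes, a person decides, corrections are logged."""
    model: Callable[[str], str]           # hypothetical AI processing step
    reviewer: Callable[[str, str], str]   # hypothetical human evaluation step
    runs: int = 0
    corrections: List[Tuple[str, str, str]] = field(default_factory=list)

    def run(self, human_input: str) -> str:
        self.runs += 1
        suggestion = self.model(human_input)            # [AI Processing]
        final = self.reviewer(human_input, suggestion)  # [Human Evaluation]
        if final != suggestion:
            # [Feedback Loop]: keep the correction for later adjustment
            self.corrections.append((human_input, suggestion, final))
        return final                                    # [Improved Output]

    def override_rate(self) -> float:
        """[Monitoring]: share of runs in which the person changed the output."""
        return len(self.corrections) / self.runs if self.runs else 0.0


def toy_model(text: str) -> str:
    # Stand-in for an AI system: simply upper-cases the request.
    return text.upper()


def toy_reviewer(text: str, suggestion: str) -> str:
    # Stand-in for a person: shortens any suggestion longer than 10 characters.
    return suggestion if len(suggestion) <= 10 else suggestion[:10]


pipeline = HumanInTheLoop(model=toy_model, reviewer=toy_reviewer)
first = pipeline.run("hello")                   # accepted unchanged
second = pipeline.run("a much longer request")  # corrected by the reviewer
print(first, "|", second, "|", pipeline.override_rate())
```

The design choice worth noting is that the person's decision always becomes the final output. The AI's suggestion is only a proposal, and a rising override rate is a signal that the system needs adjustment.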

Why HCAI Matters

- Builds public trust in AI

- Protects human rights and agency

- Leads to better outcomes because humans and machines working together can outperform either alone

- Ensures AI advances support society's goals, not just business efficiency

Key Principles of HCAI

| Principle | Meaning / Description |
| --- | --- |
| Empowerment, not replacement | AI should help people do their jobs better, not eliminate them. |
| Ethical design | Systems should be built with fairness, transparency & accountability. |
| Trust and reliability | People must be able to trust that AI will behave predictably & safely. |
| Collaboration between humans & AI | AI & people work together as a team, each doing what they do best. |
| Inclusion & accessibility | AI should work for everyone, considering diverse needs & perspectives. |
| Human agency | Supports users in making informed decisions. |
| Transparency | Explains how the AI works and makes decisions. |
| Fairness & inclusion | Avoids bias; ensures all groups are treated equitably. |
| Accountability | Human oversight of outcomes and responsibilities. |
| Privacy & safety | Protects personal data and ensures secure use. |

How HCAI is Different from Traditional AI

| Traditional AI | Human-Centred AI |
| --- | --- |
| Focus on maximum automation | Focus on human-AI collaboration |
| Efficiency & performance are top priorities | Human dignity, well-being & control are top priorities |
| Systems often work autonomously | Systems are supervised, with clear human oversight |
| "Replace the human" mindset | "Assist the human" mindset |

Some Examples of HCAI

- Education (adaptive learning systems that help teachers personalize lessons for students while keeping teachers in control of the classroom)

- Healthcare (diagnostic tools that assist doctors by suggesting possible diagnoses but leave the final decision to the human doctor)

- Workplace tools (smart assistants, such as writing aids or scheduling tools, that enhance people's tasks rather than take them over)

- Employment (fair, bias-checked AI tools for hiring)

- Urban planning (participatory AI tools used to engage communities in the planning process)

Why It Matters

Without a human-centered approach, AI systems risk:

- Reinforcing social biases

- Acting in ways users can't understand or question

- Undermining human decision-making and autonomy

HCAI keeps technology grounded in the real, diverse needs of society.